.4.1 Patricia Reed: Politics and Complexity: Epistemology and Machinic Coupling

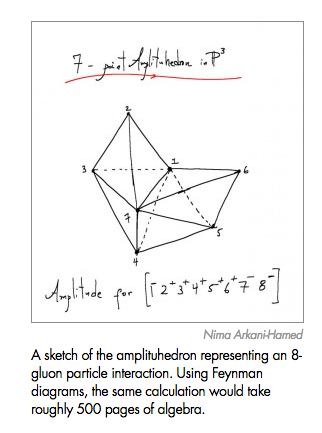

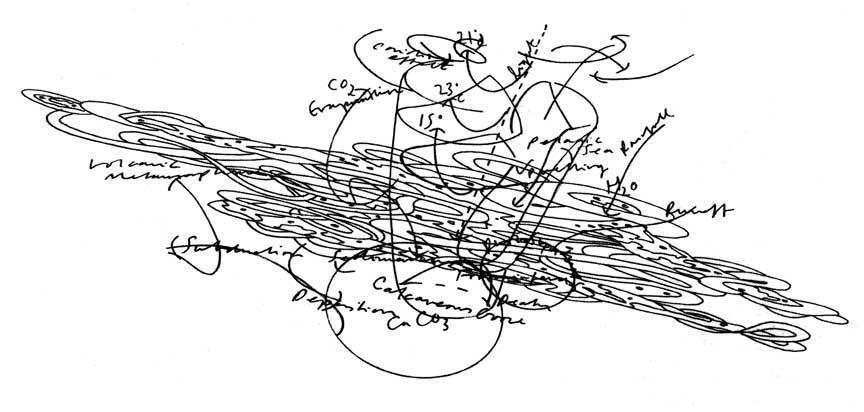

Patricia Reed’s talk takes place within the locus of ‘epistepolitics’, a forking of Foucault towards the politicization of the ramification of knowledge. It pursues tools and apparatuses of ‘framing, unframing, and reframing’, in Pete’s terms, in order to rethink and ramify ‘epistemology’ so as to “address the scale of what we are faced with having to think.” In opposition stands the threat of a “nonfuture” of debt slavery and planetary catastrophe. Currently, the emergent responses to this threat that have seen the fastest-rising discursive success lie on the right wing and are arguably integral to the threat itself: technoneofeudalism, which seeks to strip us back to more tractable social forms, whether military, debt-based, or localist; and (minority) technoescape ideologies, of which transhumanism and the private space flight/colonization industries are exemplary. Patricia seeks instead to pose AGI as a site of intervention for new incentives that can reclaim the task of scalar politics as a brand of ‘good abstraction’. This stands against the presently more typical left-wing response of “complexity fatigue”, which tends towards the bad abstraction of asystemic simplification, orienting politicization towards the figure of the “evil CEO”, rapacious individuals and corporations, or even outright conspiracy theory. Here AGI becomes an infrastructure and/or program for autoescalative cognitive and libidinal (re)investment in the problems and goals of human politics, seeking to counter maladaptive and negative-sum epistemic behaviors ranging from the cherrypicking of research within the existing operative matrix of capital power to disciplinary tribalism and autoBalkanization.
To elaborate this image of (machinically coupled) political thought, Patricia invokes the epistemic stereoscopy of Wilfrid Sellars, who proposed that most human knowledge-activities integrate two regimes of knowing, namely those of the manifest and the scientific images, wherein the generation of scientific images recursively feeds(-forward/back) into manifest images in the construction of the phenomenon as known. This calls back to the artistic discourse of “site-specificity” qua vehicle of the integration of art and politics. Under this rubric, localities or points exist inside a milieu: a generic connective domain or space, or a medium of ramification. Abstractions become epistemic drivers for generative alienation. If we integrate politics as manifest image with a computational, intelligenesis-oriented process of scientific image-making at, in, and as specific sites - such as the European Pirate Parties and their LiquidFeedback digital democracy and political knowledgemaking platform, or the legacy of the Chilean Cybersyn system that Eden Medina speaks about later in the day - we can liberate them for the (self-)construction of these globalizing epistemic engines in order to forge a new ground for complex(ity) politics.