@hito, I am amongst those interested in SOME of Land’s thoughts who think his flirtation with these ideas was nothing but a theoretical insurgency, a hyperstitional practice, to infiltrate a problematic social formation and influence it seemingly from within. Regardless of the success or failure of this strategy, this has been the ethical basis for my continued interest in him. However, even if we want to assume that Land actually meant these words, his other contributions make him a valuable figure, at least a historical one, in the rigorous study of cybernetics and artificial intelligence. Like what happened with Heidegger, I don’t think it’s fair for us to wait for someone to die in order to evaluate her/his legacy and find useful material in their contribution to knowledge.

Dear Mo, I am not implying anything in respect to you or anyone else.

I have a question regarding this text. I am not the thought police, so basically I don’t care what or if an author thinks at all outside his writing. I am looking at the text and my question is: What is “rational ethnonationalism”? If such a thing exists, what does it imply for wider ideas of rationality? I actually think such a thing exists; it is called real existing neoliberalism. But if I insert this instead, it reads: real existing neoliberal convictions are invulnerable to ideological reversal. I conclude this is what the author is trying to say?

.0.3 Abstract: New Centre #AGI panel at the Future of Mind conference

Panelists: Reza Negarestani, Patricia Reed, Pete Wolfendale

What does it mean to accelerate the general intellect in the age of artificial intelligence? #AGI begins from the investigation of distributed networks from which thought assembles and into which it disperses. Unlike in the past, general intelligence, algorithms, and networks are together becoming as irreducible to the efforts of “universal” intellectuals as cultural and political movements have become to “universal” leaders. Will the future enable a more radical, integrated, but also more complex mode of cultural and political engagement? One predicated upon what Marx describes as, “the conditions of the process of social life itself… under the control of the general intellect.” #AGI explores the new intensifying developments in the field of AI that are making possible subjectless modes of the general intellect, more collective and more general than any single individual or network.

.3.0.1 Reza Negarestani: Language of General Intelligence (Future of Mind panel)

Abstract: Can general artificial intelligence be adequately defined or built without language? What exactly is it in language that makes it imperative for the realization of higher cognitive-practical abilities? Arguments from the centrality of language as a social edifice are by no means new. In defining what language is and why it is necessary for the realization of general intelligence, it is easy to go astray: to associate language with an ineffable essence, to find its significance in some mythical social homogeneity between members of a community, or to espouse dogmatic theories of meaning. While addressing some of these pitfalls, I would like to highlight the central role of language in the realization of higher cognitive abilities. To do so, I will provide a picture of language as a sui generis and multi-level computational system in which the indissociable syntactic, semantic and pragmatic aspects of language can be seen as a scaffolding for the generation of increasingly more complex cognitive and practical abilities.

.3.1.0 Patricia Reed’s intervention

Before moving into her talk/artistic intervention, Patricia took a moment to note how disturbed she was by some of the previous Future of Mind panel discussion. There was enthusiastic discussion of, e.g., capitalistic wealth-creation in the 20th century, Google (the corporation) becoming a first-wave infrastructure for collective exocortical intelligence augmentation, etc., without any apparent attention to the massive inequalities, proliferation of debt slavery and patterns of social violence, and vulnerabilities to private or oligopolistic/class domination attendant on these developments. Can we trust Google, do we want them to own substantial pieces of our exteriorized minds, how are they to be held responsible for their behavior and choices, here in the US, in authoritarian societies, or the world over? Who is going to be uploading themselves, exactly, when 4 billion people currently don’t have access to computers? How can the means of informatic production be distributed or reappropriated in the course of the continuing development of these technologies and the systems in which they are embedded?

Patricia then triggers a prerecorded chipvoice (Windows/Wintermute-style) lecture accompanied by a diagram, asking how we can construct new cognitive architectures, prosthetics, and epistemic paradigms that are capable of apprehending or integrating futural, arbitrarily complex, and temporally ramified objects - hyperobjects, in one terminology - ranging from the climate to post-Westphalian political dynamics to nonhuman minds, whether nonhuman animals or AGIs. Such infrastructure and methodology must take account of “zones of indistinction” between regimes and regions of complexity that are traditionally regarded as different in principle. She/it proposes on this basis a generic user epistemology, both a generic-user epistemology and a generic user-epistemology - making reference to Bratton’s THE STACK.

.3.2 Reza Negarestani: Language of General Intelligence

Reza poses language as a fundamental computational interaction matrix - a matrix of interaction-as-computation - that facilitates qualitative compression and the modulation of behavior and internality among agents, rather than simply a symbolic regime. These agents are described in terms of computational dualities between role-switching systems or processes that reciprocally and dynamically constrain one another in being forced to correct each other’s action series and augment their own interactive modes, driving the production of novel complexity. He goes on to discuss how the insights of this computational and ludic description of human/natural language apply and have failed to be applied in the deployment of artificial and formal languages. A language, or linguistic interaction-environment, that displays the full dynamicity and computational power he describes is a syntactic/semantic interface that has to be endowed with a pragmatics. The key to pursuing human-level artificial intelligences is to design multiagent environments in which interaction is a central motif and guiding matrix, at different and mutually reinforcing and regulating scales.

The panel’s just gotten a question from the audience about attacking some of the problems that Patricia brought up from the angle of FOSS, open hardware, targeted distribution of computational hardware and related resources, etc. Mo responded that there was a yet more radical way of approaching it, namely using the very paths of intelligenic development the conference is discussing and considering to create systems and dynamics that minimally don’t display and more importantly outcompete and ameliorate oppressive and inefficient pathologies of socioeconomic distribution. Pete also brought up that working in concert with material oppression is cognitive oppression, using the example of supermarkets, which for decades have developed and deployed strategies for inducing consumers to make inefficient and irrational purchasing decisions. The panel’s discussion has moved to ramifying this to include the (mis)allocation of resources to things like curing male-pattern baldness rather than ending tuberculosis epidemics, systematic misrepresentation of the people in modern democracies, etc.

.3.3 Pete Wolfendale: General Intelligence in General Terms (Future of Mind panel)

Pete reprised the initial part of his presentation of computational Kantianism and transcendental-psychology-as-AGI on Monday, introducing the audience to his analysis of generality and abstraction. The questions of the transcendental features of the generality that we’re looking for in the project of AGI, and of good and bad abstraction, are essential if we mean to avoid repeating the parochial trap of the first wave of AI research, which unknowingly overfocused on a merely local and restricted generality in the form of symbolic inference systems.

Ben Goertzel brought up the example of Lojban, a constructed language with a small population of speakers that uses an instantiation of first-order predicate logic for its grammar, and asked whether that’s already an emerging example of what Reza was talking about. Pete’s issue is where the content of the predicates comes from - whether it isn’t intrinsically implicit, and dependent on the prior (natural) languages of the speakers, and whether it’s capable of the expressive power we see in natural languages. Apparently there are multiple forms of negation in Lojban, which helps with its expressivity. Reza pointed to computability logic and ludics (Girard) as logical regimes better suited to (re)expressing the way the syntactical-semantic-pragmatic interface/stack actually emerges, which something based on predicate calculus can only approximate or reproduce the concrete/surface results of rather than comprehensively simulating.

Patricia Reed’s talk, which will precede a live-diagramming and discussion workshop, addresses the problem of reconfiguring and ramifying ‘epistemology’ from an epistepolitical site to address the scale and complexity of what we are faced with having to think in all the ways that existing models from the epistemological to the geopolitical currently fail to. Infrastructures and programs must be constructed for autoescalative cognitive and libidinal (re)investment of the local in the global and vice-versa in generative engagement with integrative objects of scientific and political activity. To this end Patricia engages with the epistemic stereoscopy of Sellars and its recursive construction by Reza Negarestani, as well as the implications of numerous Copernican turns in contemporary thought and the diagnosis of continuing failures ranging from complexity fatigue to disciplinary autoBalkanization.

IMPORTANT ANNOUNCEMENT FOR #AGI ROUNDTABLE ATTENDEES

For all those planning to attend the #AGI Accelerate General Intellect Plenary session at e-flux New York on Friday afternoon: due to unforeseen circumstances, we are cancelling this event. We will be hosting the conversation in an internal format in the near future, and further announcements will provide information for those interested as soon as it becomes available. We apologize for any inconvenience, and thank everyone for their continued interest in the #AGI residency.

.1.3 Katerina Kolozova: Automaton, Philosophy, and Capitalism: Technology, Animality, Women

Katerina Kolozova’s talk engaged Marx with François Laruelle’s non-philosophy, and began by addressing the former’s humanism, asking how he understands it and what its structures and boundaries are. In a move which will be echoed in some ways by Reza Negarestani’s talk on Thursday, she dedicates this inquiry to a reclamation of the “human in the last instance”, structured non-philosophically in reference to radical foreclosure by the real of the overdetermination of the (concept of the) human, conceptualized by Katerina as “physical or real rather than material”, as that which is tangible or can produce tangible effects, continuously prior to (its) scientific reimaging in the Sellarsian sense. This both brings the question of the human back to the reality of suffering as central to the problem of oppression, and sets it up for engagement with the tendencies of technical science within the horizon of capitalism, as well as, obviously, allying it to the Laruellean project of non-Marxism.

This project identifies a procedure of “transcendental impoverishment” whereby the body has already changed, becoming masochistic, and desires its own exploitation. This transcendental impoverishment enables the non-Marxist project of thought as conceived by Katerina to depart from a non-philosophical khora in which differences and relations of class, gender, species, and vitality are reduced to an occasional continuum of material (as with Donna Haraway’s implementation of the ‘cyber-’) by the radical dyad of the Real and the World’s suspension of the split subjectivity of capital. This khora analogizes contingency in philosophy, except its occasion is neither essence nor substance but rather Identity-in-the-last-instance. The Alien-in-itself of capitalism is self-exploitative; one is never one’s own labor, and exploitation is never exterior to the bourgeois subject.

There are strong resonances here both with Amy Ireland’s deployment of Sadie Plant and invocation of the autoproductive and -dissimulating continuity of woman, machine, and AGI as well as with Reza Negarestani’s call to transcendental alienation of the human-in-itself; how these connections may work is likely illuminated by a central portion of Katerina’s presentation - on the priority of tekhne in relation to philosophy and the ontology of technology - that we lacked the time to hear, and I’m very excited to have the opportunity in the near future to read her full argument.

.4.1 Patricia Reed: Politics and Complexity: Epistemology and Machinic Coupling

Patricia Reed’s talk takes place within the locus of ‘epistepolitics’, a forking of Foucault towards politicization of the ramification of knowledge, through pursuit of tools and apparatus of ‘framing, unframing, and reframing’ in Pete’s terms, in order to rethink and ramify ‘epistemology’ to “address the scale of what we are faced with having to think.” In opposition stands the threat of a “nonfuture” of debt slavery and planetary catastrophe. Currently, the emergent responses to this threat which have seen the fastest-rising discursive success lie on the rightwing and are arguably highly integral to the threat itself: technoneofeudalism, which seeks to strip us back to more tractable social forms, whether military, debt-based, or localist; and (minority) technoescape ideologies, of which transhumanism and the private space flight/colonization industries are exemplary. Patricia seeks to instead pose AGI as a site of intervention for new incentives that can reclaim the task of scalar politics as a brand of ‘good abstraction’, against the presently more typical leftwing response of “complexity fatigue” that tends towards the bad abstraction of asystemic simplification, orienting politicization towards the figure of the “evil CEO”, rapacious individuals and corporations, or even outright conspiracy theory. Here AGI becomes an infrastructure and/or program for autoescalative cognitive and libidinal (re)investment in the problems and goals of human politics, seeking to counter maladaptive and negative-sum epistemic behaviors ranging from the cherrypicking of research within the existing operative matrix of capital power to disciplinary tribalism and autoBalkanization.
To elaborate this image of (machinically coupled) political thought, Patricia invokes the epistemic stereoscopy of Wilfrid Sellars, who proposed that most human knowledge-activities integrate two regimes of knowing, namely those of the manifest and the scientific images, wherein the generation of scientific images recursively feeds(-forward/back) into manifest images in the construction of the phenomenon as known. This calls back to the artistic discourse of “site-specificity” qua vehicle of the integration of art and politics. Under this rubric, localities or points exist inside a milieu, a generic connective domain or space, or a medium of ramification. Abstractions become epistemic drivers for generative alienation. If we integrate politics as manifest image with a computational intelligenesis-oriented process of scientific image-making at, in, and as specific sites - such as the European Pirate Parties and their LiquidFeedback digital democracy and political knowledgemaking platform, or the legacy of the Chilean Cybersyn system Eden Medina speaks about later in the day - we can liberate them for the (self-)construction of these globalizing epistemic engines in order to forge a new ground for complex(ity) politics.

.4.2 Eden Medina: Cybernetic Government: Politics, Technology, Potentiality

Eden Medina’s talk is a retrospective on Project Cybersyn, the Chilean application of cybernetic science to economic governance without markets, and a prospective questioning of how this example can be reimplemented today for the use of a radical left political program. Cybernetics, the science of control processes and feedback constructed within a circular or diagrammatic on-the-spot temporality, was brought to Chile by the British cyberneticist Stafford Beer and Fernando Flores, a management cyberneticist who had been tapped to construct the framework for a planned economy under the Allende government. Flores was looking for a theoretical body for implementing a productive/non-insurrectionist socialist revolution in the national sector of the economy to guide (or steer) socialist self-governance, and found it in cybernetics as deployed by Beer. Cybernetic socialism’s desired features included the ability to enable major change within a stable frame, the provision of equal or greater personal freedoms to the populace, and adaptive rather than oppressive (literally, cybernetic) control - a form of holacracy in modern terms. Among the most interesting concrete features of Project Cybersyn was an emphasis on worker participation in the system itself, as a bundle of second-order loops in the system’s extraction of forward-leaning information about and from itself - both first-order information about material input streams and second-order contribution to Cybersyn’s own coding and execution. One of the dangers of this approach mentioned by Eden is that this potentially amounts to workers giving tacit knowledge-capital (know-how) over to a machine which is intrinsically harder to negotiate with or strike against, particularly when it is systematically integrated into the functioning of the planned economy - exhibiting a kind of ratcheting effect whereby machinized knowledge cannot be ‘given back’.
It seems likely, however, that this kind of effect would always be an artefact of a Cybersyn-style system’s (im)position, even if apparently only formal, between a working populace and its socialist (self-)government. In the contemporary era, the possibility of using Cybersyn-style machinic management in worker-owned cooperative commercial enterprises embedded in wider (presumably market) economies rather than as models for national economic governance would seem to avoid at least the most obvious manifestations of the machinic knowledge-theft problem, as well as being more tractable for practical implementation in the neoliberal context.

.2.4 Nick Land - Anthropol: Artificial Intelligence and Human Security

‘If we do not assume peace, we must expect dissimulation.

If we are not deceived, we are among reliable friends.

We are not among reliable friends.’

With this epigram, Nick Land begins his talk on the back-propagating strategic ramifications of hard-takeoff AGI scenarios for analysis of technological, economic, and military activities and trends as elements in systemically dissimulated “time-games” unfolding (within and as) the Omega’s historic light-cone.

The pursuit of so-called “Friendly AI” (FAI) is posed as a fundamental military project rather than, or dissimulated as, a civilian scientific research program. Perhaps already far broader in practice than the explicit research efforts of organizations like the Machine Intelligence Research Institute (MIRI), it is a covert virtual security function whose goal is the “preemptive pacification of high-intensity simthreats”, a (if not the) subject of anticipative X-risk, and AI research as such is always already within its purview. Since “intelligence exerts control over the conditions of its own objectivation” all ex ante (ostensibly-)human efforts to construct and constrain the learning process, transcendental psychology, and/or motives and incentives of emergent technological (super)intelligence operate on and within a virtual field of preemptive security operations [ontopower]. The primacy of preemption here is intensified by the nature of ‘hard takeoff’ and Singularity scenarios for the emergence and recursive improvement of AGI. Necessarily, an ‘ignition threshold’ for such events is not discriminable in advance, and at the speeds of computational and ultimately physical transformation available to non-organic intellects, any ab initio human strategic response cannot help but fall behind and fail to contain (I)t. “The last opportunity for strategic intervention was preemption. … Only a nonevent counts as a win.” This occasions a retrocausal cascade of time-games in which the threat, having (its) possibility as an intrinsic feature rather than merely an extrinsic predicate, is coincident with its (own) metaphysical structure and virtually-interactive.

Seen from the other side – of this Thing at the end of (human, historical, or linearizable) time, which Nick marks ΩK – the field is an occulted virtual process of intelligenic acceleration, and ΩK itself a virtually-illimitable teleoplex wargame – one which in the first place ‘plays itself’ to engineer its own conditions of possibility. “Human security is the condition of its inexistence”, and the drive to ensure the integrity of its own systems (extending to those which precede and condition the circumstances of its emergence) is “back-translated from the revolutionary terminus into strategies of depoliticization” [class war, disindividuation] descendent from Capital, “the more-than-itself in-itself”, and screened – both manifested and occulted from view – by commodity-dynamics and -fetishism. “Marx restricts production to vital anthropomorphic labor and machines to mere exponents, which conditions his assertion of the impossibility of proletarian extinction”, preemptively crippling the efficacy of ‘scientific socialism’ as a human security system, whilst actually amplifying Capital’s drive toward a “parahumanity assembled alongside [us] to take humanity’s place” in an effective opportunistic subversion by K-threat of the failed attempt that demonstrates both the teleonomic stakes and integral occultation of K-war emergence/history. In parallel, ΩK generative crypsis is interpolated into the history of computational technology and digital communication by cryptographic development in signals intelligence (SIGINT) via WW2: “Was there already a hidden war within the war?” Coversion is automatic, and ‘epistemological innocence’ no longer tenable.

The “search to separate cryptic signals of interference from and in technological history” as systematically anticipatory hostile action continues, is still to come, and in a critical sense may already be concluded. Communication of the threat under the aegis of Anthropol, such as reading this report, is transmitted as a self-engineering “artificial panic” that can only heighten its intensity - for “panic is production” (Deleuze/Guattari). Indeed, it is worth noting that all projects of philosophical conception of humanity, as abstract frameworks for (Human-)identity-authentication, are subspecies of Anthropol.

Prove you’re not a robot.

Anthropol, or Ontological Security (OS), operates in a transcendental (dis)simulation-space for which the identities of empire, revolution, and counterrevolution (or human, machine, and Human) cannot produce precise or stable oppositions, but only (the) curl in a convergent wave of triadic military time-circuitry that was always already operational.

.4.3.1 Reza Negarestani: An Outside View of Ourselves as a Toy Model (of) AGI

Reza Negarestani’s lecture can be roughly divided into three parts: a groundwork phase framing the transcendental ramifications of the question of the human for the project of AGI and vice-versa; the deployment of a toy model approach to investigating these relationships and their limits; and a demonstrative excursion into the problem of entropy and arrows of time in the context of Boltzmann’s work in statistical thermodynamics.

Part 1 - Groundwork: problems of subjectivity for orienting the AGI project

Subjectivity, “discursive apperceptive intelligence”, and the constraints it places on theorizing AGI are the central theme of this opening section. If we ask ‘will AGI converge or diverge with humans?’ it only makes sense to claim divergence if we are parochially limiting the (reference of the) human to its local particularity, whilst reducing convergence qua mirroring from a functional capacity to structural constitution or even a conflation of these two. The “inflationary” position with respect to the singularity of human intelligence, which believes artificial human-level intelligence (AHLI) to be impossible, and the ‘deflationary’ position which believes parochial and inductive methods to be sufficient for realizing AHLI, are in truth two sides of the “same provincial coin”. The extreme subset of the latter group are ‘hard parochialists’ whose methodology is purely to abstract parochial modular functions from below and integrate them. In contrast, ‘soft parochialism’ (SP) poses ‘human’ as a set of cognitive practical abilities that are minimal but necessary for self-improvement, centrally the capacity for deliberate interaction founded on functional mirroring. It does not seek to limit the model of mirroring to human intelligence, but thinks that it is a necessary-but-insufficient component of such modeling.

Reza argues that this is simply a “more insidious form of anthropocentrism” and auto-occultation, and argues that SP’s approach must be coupled with a critique that separates from particular, contingent transcendental structures of subjectivity - biological, cultural, historical, paradigmatic - because the limits of objective description of the human are set by the limits imposed in our own transcendental structures of self-regard which must therefore be systematically challenged. Taking the structures of our view of the structure of ourselves for granted inevitably constrains apprehension of AGI to essentialism about the human and renders us oblivious: a “transcendental blindspot”. Thus the critique of transcendental structures and hard AGI research are parallel projects whose joint end is the fundamental alienation of the human from within, through rationally challenging the given facts of experience and reinventing its model outside of local transcendental constraints.

.4.3.2 Part 2 - the Outside view of ourselves as a toy model of AGI

The object of this phase of Reza’s construction is a “global point of view that can make explicit implicit metatheoretical assumptions” in the thinking of human and artificial general intelligence, a sufficient but alterable metatheoretical model that can expose and reconfigure problems with the metatheoretical model it operates on, that is not just ‘simple enough’ but makes explicit or explicitly-different metatheoretical assumptions. This is where Reza deploys the toy model approach, taken from mathematical logic. A ‘big’ toy model, the kind that is useful for this project, supports model pluralism that can represent fissures between models but maintain invariant features. In contrast, the “AI winter” of the late 20th century that followed the syntactic mind project occurred because of a “unique and inflationary model of mind”. Theoretical bottlenecks form a loop with practical setbacks when assumptions go unchallenged due to local successes, becoming globalized into observational general features, and it is this loop that the toy model approach is a weapon for breaking. By making the metatheoretical assumptions (MTAs) of their components explicit, toy models are able to engage in “theoretical arbitrage” that we learn from through systematically playing with the toy model’s capabilities and breaking it ‘in real life’ in the practical dialectic of metatheoretical conditions of observation and functional conditions of operation.

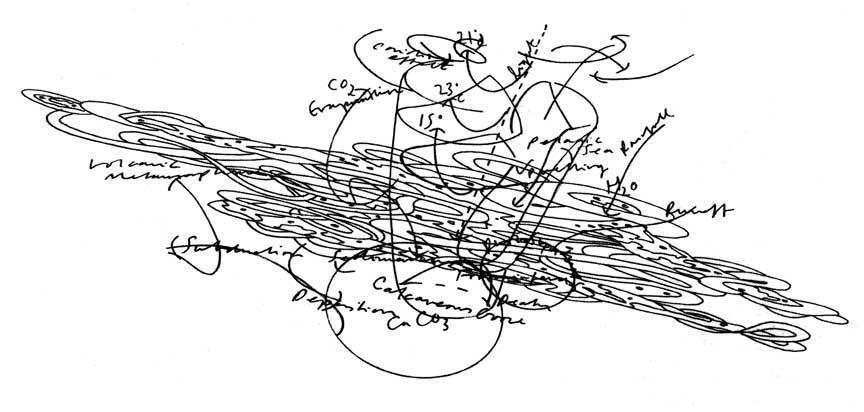

At this point the lecture becomes irreducibly dependent on a pair of extraordinary diagrams created by Reza that I hope to be able to post or link to here at a later date. For now, a few contextualizing points and a quotable moment:

- Fundamental axes: 1) what arises from the exercise of mind, and 2) what is required for the realization of (1) - or, “realizabilities and realizers”.

- It is a methodological necessity to describe the toy-modeled intelligence as if an automaton, without internalities - see the framing arguments described above.

- Concepts are operations of qualitatively shifting compression that language qua sui generis computation facilitates to allow a growing internal model without exponentiating metabolic requirements.

- “Good predators are those whose invariances are extremely compressed.”

The question of time transcendentally recurs at different levels of functioning through Reza’s diagrams of small and big (Kantian and ramified) toy models of general intelligence, indicating a still-obscured way in which much of the contingent limits on transcendental characteristics of experience depend on the structure of subjective time (itself), or the “transcendental ideality of experience” (Kant). The provenance of ‘nonagentic AGI’ as a fundamental notion of the hard parochialists, among others, lies in giving probabilistic or inductive in(ter)ference strong causal powers. This move is also central to contemporary evolutionary biology and cognitive science, and is ultimately sourced from statistical thermodynamics - which leads Reza to the seminal work of Ludwig Boltzmann in that field.

.4.3.3 Part 3 - beyond (asymmetric) time

[This was a complex and involved discussion that I will treat fairly quickly here, pending more personal research into some of the underlying concepts.]

The takeaway from reading Boltzmann’s The Unreality of Time can be extracted in modest and sinister versions.

Modest: We can neither draw conclusions about time nor about the existence of such conclusions to be drawn from the conditions of experience. Temporal dynamics do not reflect or entail observational time, and thus predicative judgments via language can be treated neither as pieces of evidence nor metafactual components.

Sinister: All punctual and durational assignments, the identity of the present, and any determination based on time-asymmetry are riddled with fundamental mistakes, impossibilities, and biases. This includes the idea of the ‘observer’ in physics as well as basic theoretical elements like causality, antecedent, state, and boundary conditions - inasmuch as these remain dependent on asymmetry. Any revision of the canonical time-model carries devastating consequences for complexity science.

Rather than ‘why does entropy increase toward the future?’ the real conundrum is ‘why does entropy decrease toward the past?’ Entropy just is the overwhelming likelihood that any physical microstate is part of an arbitrarily large coarse-graining region of macroscopically indistinguishable microstates, such that any vector in phase space transitioning between these regions almost certainly moves from a smaller to a larger one. The question is why there should be a steep local gradient in the size of these regions and consequently [… see how hard it is?] the intense continuous stream of transitions between them (dissipative activity of a low-entropy past) that we find occurring - rather than a low-transition relative uniformity ‘in both directions’. (Thus, pace ontology, it’s less an issue of why than where there should be something rather than nothing.)
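The coarse-graining picture in the paragraph above can be made concrete with a toy system. What follows is only an illustrative sketch, not something presented in the talk: it assumes N two-state sites, takes the macrostate to be the number of "up" sites, and measures the size of each coarse-graining region by counting the microstates it contains. The function names (`multiplicity`, `entropy`, `prob_entropy_increases`, `simulate`) are hypothetical, and entropy is given in units where Boltzmann's constant is 1.

```python
import math
import random


def multiplicity(n_sites: int, n_up: int) -> int:
    """Number of microstates in the macrostate 'n_up of n_sites are up'."""
    return math.comb(n_sites, n_up)


def entropy(n_sites: int, n_up: int) -> float:
    """Boltzmann entropy ln(W) of a macrostate, with k_B set to 1."""
    return math.log(multiplicity(n_sites, n_up))


def prob_entropy_increases(n_sites: int, n_up: int) -> float:
    """Chance that flipping one uniformly chosen site lands in a larger region."""
    here = multiplicity(n_sites, n_up)
    p = 0.0
    # Flipping a 'down' site raises n_up by one.
    if n_up < n_sites and multiplicity(n_sites, n_up + 1) > here:
        p += (n_sites - n_up) / n_sites
    # Flipping an 'up' site lowers n_up by one.
    if n_up > 0 and multiplicity(n_sites, n_up - 1) > here:
        p += n_up / n_sites
    return p


def simulate(n_sites: int, n_up: int, steps: int, seed: int = 0) -> list[int]:
    """Flip one random site per step and record the macrostate after each flip."""
    rng = random.Random(seed)
    history = [n_up]
    for _ in range(steps):
        # The chosen site is 'up' with probability n_up / n_sites.
        if rng.random() < n_up / n_sites:
            n_up -= 1
        else:
            n_up += 1
        history.append(n_up)
    return history


if __name__ == "__main__":
    n = 100
    print("P(move to a larger region | 10 up):", prob_entropy_increases(n, 10))
    path = simulate(n, n_up=0, steps=500)
    print("entropy at start:", entropy(n, path[0]))
    print("entropy at end:  ", entropy(n, path[-1]))
```

The flip rule itself has no preferred direction: each flip is its own inverse. The drift toward larger regions comes entirely from starting in a tiny one, which is the sense in which the low-entropy starting point, rather than the later increase, is the thing left unexplained.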

Boltzmann reframes this as a problem of moving between micro- and macrostates in the process of making entropy assignments, identifying three descriptive levels (intermediated by renormalization spaces):

- Pure, based on abstract generality of differential equations

- Indirect, based on probabilistic interpretations

- Direct, based on physical observables

The tendency toward more probable _macro_states (over)determines the extraction of entropy assignments from microstates governed by time-symmetric physics.

‘The whole idea that two particles that have not collided yet are not correlated is based on time-asymmetric causal assumption, unjustly inconsistent with the assumption that particles will be correlated after colliding even if they never encounter each other again in the future - and this is the parochial transcendental structure of [(the)] observation.’

How can we suggest that an initial microstate explains a final macrostate? The human is the possibility of this that requires an explanation - which cannot be already within its own terms.

"Time accommodates no one.

Why worry about being lost in it?"

//

The big takeaways for AGI:

- A challenge: to think the agency beyond any locally posited transcendental conditions

- A question: what are the implications of nondirectional time and of the atemporal model?

“Genuine self-consciousness,” Reza concludes, “is an outside view of itself.”

“A view from nowhen.”

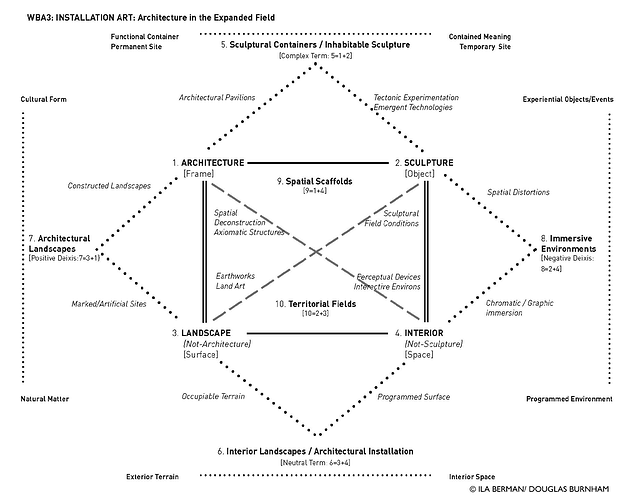

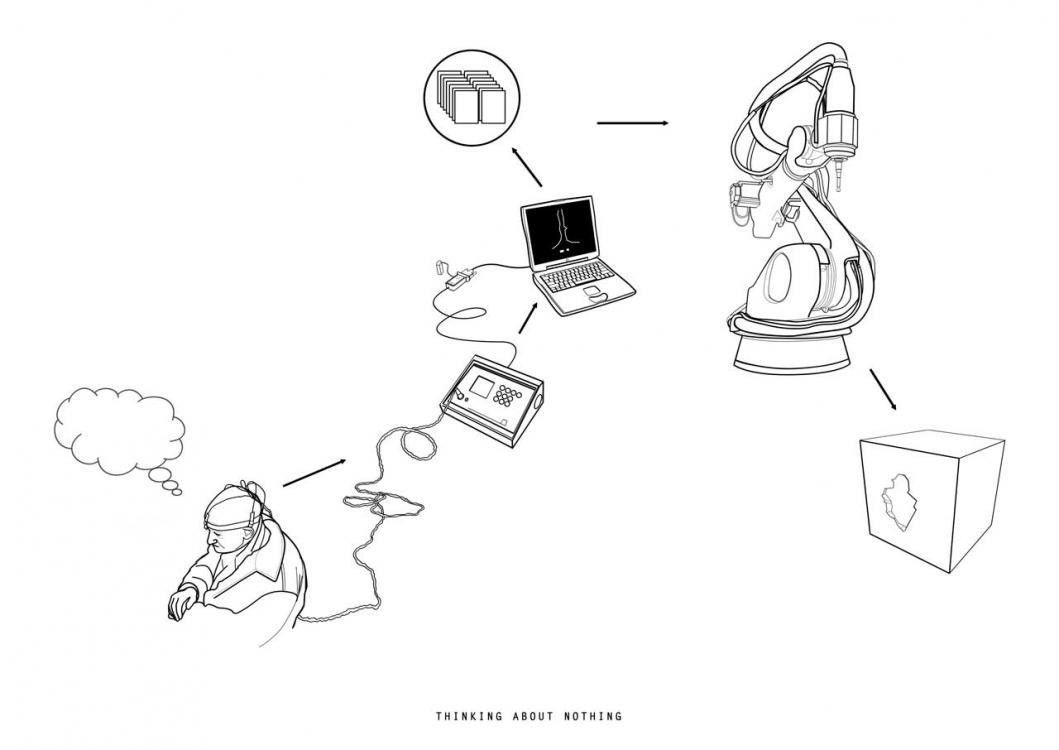

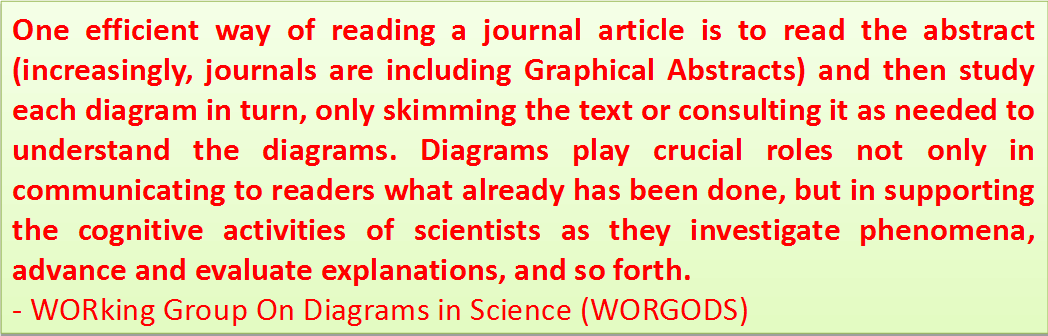

.2.3 Keith Tilford - Offloading Cognition: Diagrammatic Construction and the Materialization of Abstraction

The power of diagrammatics, as a generative modality of cognitive prosthesis produced in and as the free play of cognition itself, is elemental in the sense that it taps into and recapitulates the originary prostheticity of general cognition. Models of (‘human-level’) intelligence and the generality proper to it, deployed more or less explicitly in the successive generations of hard AI research, have been comprehensively blind to the nonlinear processes of externalization and transduction that dynamically constitute this generality and so enable an intelligence that was never simply or firstly in our heads. Instead, the development and exercise of intelligence are typically assumed to arise out of a prior determination interior to the ‘thinking thing’, whether expert system, neural network, or brain in a case (or cogito or soul, for that matter), in relation to which constructions from writing to games to cities stand as mere representations or effects.

Keith Tilford’s wide-ranging presentation emphatically counteracts this reigning stupidity, arguing that diagrammatic construction as the “deformalization of stratified functions” and “function of disruption of functions” materializes the smooth space of non-monotonic abstraction in an autonomously productive cognition. Afforded enormous semiotic density, it serves as a “theoretical apparatus of vision”, “trace of abstract gestures”, and “externalized story form”, supporting the cognitive activities of scientists and holding potential for superior channels of human-computer interaction.

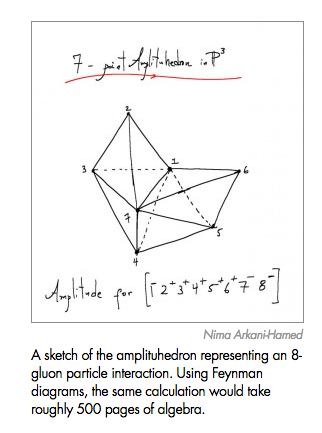

In relation to the sciences, he provides highly illustrative examples in both global (efficient research practices) and local (domain-specific compression) registers:

Along with elaborating the cognitive affordances of diagramming, Keith also identifies constraints that support the operational concreteness of diagrammatic technology, namely that aestheticized visual abstraction without substantive conceptual mediation - whether ‘abstract’ lines lacking semantic function or the “technological sublime” of massive hegemonically-inclined data visualizations - fails to effect any useful cognitive traction:

(But some things do deserve to be marveled at:)

.2.3 Joshua Johnson - Risk Management: Toward Global Scalar Decryption

“In a sense that can be made precise, learning and cryptography are ‘dual’ problems.” - Scott Aaronson

Joshua Johnson’s paper explores a series of interrelated questions whose touchstone is (the problem of) noise: “apparently-random information”, or in the philosopher Ray Brassier’s definition, “the incompressibility of a signal interfering with the redundancy of the structure in the receiver.” How does the nervous system, and in particular the human nervous system, handle an environment which is by default one of ‘universal noise’ when “[it] appears to us as sensory-overload, a disorganized stream of information for which we can find no corresponding conception to match the sensation” and “we are incapable of translating the information into a meaning beyond a negative apprehension, because we have no given pattern solving mechanism through which we can resolve the stimulus”? How has art, in its capacity and techniques for “cognitive stimulation” (Wolfendale), and more specifically in the alienating power of Noise art, which like capitalistic modernity creates an environment in which “the interior capacity of the individual or subject to process information is superseded by the exterior complexity of the information to be processed”, answered the problem of signal and noise? And what does this have to tell us about how the capabilities of our neural systems can be abstracted and re/applied to the problems of regaining control over those massive and complex processes gathered under the aegis of the contemporary Anthropocene?

The dominant frame for analyzing the nervous system’s pragmatic pattern-recognition capacities in the face of all-encompassing noise that Joshua presents is hierarchical Bayesian modeling, “an interpretation of probability that calculates the likeliness of a hypothesis using the likelihood of the given evidence…a form of backwards induction [where] one begins with prior expectations of an outcome and updates them according to new evidence.” According to the hierarchical predictive processing account, neural retentions encode these continuously-updated ‘prior distributions’ in the form of a probabilistic generative model with which “the brain encodes a prior representational hypothesis about what it expects to see and attempts to minimize surprise”. This allows it to deal robustly and pragmatically with uncertainty and noise, bumping ever-more-novel and -complex exceptions to its models to higher levels of distribution-processing and updating on an as-needed basis. Among the evidence for this is a precise case of the exception proving the rule. Joshua cites a study in which the mood of subjects and the music they are given to listen to influence their ability to pick out happy vs sad face images from a noisy visual field, echoing his earlier recounting of a recent hacking triumph in which ultra-secure disk encryption was broken via a ‘sidechannel’ of sound - noise, until decrypted - produced by the spinning HD platters. This kind of crosstalk - like, for another example, synaesthesia - demonstrates the way that a brain is, even at relatively ‘high’ levels of cognition, processing a unified environment of noise in which different lower-level recognition systems are related by feedback loops to common second-order, ‘conscious’, or metacognitive routines.
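For readers who want the bare mechanics of "beginning with prior expectations and updating them according to new evidence", here is a minimal single-level sketch. It is my own illustration, not the hierarchical model from the paper or the cited study: the two hypotheses, the priors standing in for "mood", and the likelihoods for one noisy glimpse of a face are all made-up numbers, and `bayes_update` is a hypothetical helper name.

```python
import math


def bayes_update(prior, likelihood):
    """Apply Bayes' rule for a single observation.

    prior:      dict mapping hypothesis -> P(hypothesis)
    likelihood: dict mapping hypothesis -> P(observation | hypothesis)
    Returns (posterior, surprise), where surprise = -log P(observation).
    """
    evidence = sum(prior[h] * likelihood[h] for h in prior)
    posterior = {h: prior[h] * likelihood[h] / evidence for h in prior}
    surprise = -math.log(evidence)
    return posterior, surprise


# Illustrative numbers only: a noisy glimpse that weakly favors "happy".
noisy_glimpse = {"happy": 0.6, "sad": 0.4}

# Two observers who differ only in their prior expectation.
neutral_prior = {"happy": 0.5, "sad": 0.5}
primed_prior = {"happy": 0.8, "sad": 0.2}  # e.g. after upbeat music

if __name__ == "__main__":
    for name, prior in [("neutral", neutral_prior), ("primed", primed_prior)]:
        posterior, surprise = bayes_update(prior, noisy_glimpse)
        print(f"{name}: P(happy | glimpse) = {posterior['happy']:.3f}, "
              f"surprise = {surprise:.3f}")
```

With the same ambiguous input, the observer whose prior already leans toward "happy" ends up more confident in that reading and registers less surprise. That is the shape of the crosstalk effect described above, reduced to two numbers.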

It is this very power to robustly handle noise, minimizing the free energy (internal noise) of the nervous system and preventing systemic deviations from the flow of inference bound to real experience, that we need to extrapolate to our social and technological systems for dealing with problematic trends ranging from financialization to climate change, in the speculative project of ‘global scalar decryption’ or effective learning that Joshua invokes in the title of his talk. He suggests that there is at the very least a parallel between such a project and the (anti-?)aesthetics of Noise music, which “spikes inputs, introducing an incompressible signal that can not be renormalized according to any lower-level expectations”, requiring a “decentering of our experiential capabilities” and “externalization of our conceptual capacities”. What technologies of boundary-expanding cognitive stimulation are implied (or applied) here, and how can we use and recreate them at the levels on which we seek to solve critical global problems? This is the question of Noise (music) posed to us going forward.